Deepfake - A Scam so Surreal

Fake news is old news, but now it looks different.

It’s hard to think of moral uses for technology built to deceive.

Deepfake technology is the most surreal form of media manipulation we’ve ever seen, one which threatens the trust we place in our own perception.

When we have reason to doubt our own senses, verification becomes nearly impossible.

An absence of trust can be just as damaging as misplaced trust, leaving a polarised society to take sides on which is which.

The information era brought psychological warfare into our homes. Disinformation, or the mere possibility of it, means we now fight to filter truth from lies and advertisements.

One recent scam highlighted this growing issue of digital trust and identity.

DeTrade Fund presented themselves as a community-driven project that would give users access to their CEX arbitrage bots, provided they held enough of the native token, DTF.

DTF was sold in a private sale that raised 1,438 ETH, after which the company vanished with the funds.

To win their users' trust, this scam focused on building a fake identity with both an online and an offline presence. They registered a business with Companies House and arranged news releases on several platforms, repeatedly showing the face and name of their CEO, “Mark Jensen”.

In a market full of anonymous, online-only projects, DeTrade stood out with their “real world” identity. The scammer capitalised on the trust that we place in the human face.

After users found that the private sale had sold nothing but lies, attention turned to the promotional videos that DeTrade had published across social media.

An unusual performance by the supposed CEO of DeTrade attracted attention when a user suggested that he was in fact AI-generated.

The full Twitter thread can be found here.

Rekt reached out to an industry expert (IE) for their opinion.

IE:

My name is REDACTED, and I am the CEO of REDACTED. We develop proprietary machine-learning-based technologies to automate the creation and distribution of adult entertainment content, or in layman's terms, we are building AIs to create porn. A large part of that involves working with deepfake technologies, and specifically with how to insert AI-generated people into videos photorealistically through deepfakes.

rekt:

Can you tell us about your work in AI before you merged it with crypto?

IE:

Our team has over a decade’s experience in machine learning and computer vision, working across all industries but primarily developing solutions for large-scale agriculture and pharmaceuticals.

rekt:

Regarding the DeTrade video - what were your thoughts when you watched it for the first time?

IE:

There was a lot of speculation that it was “AI-generated”, a deepfake, or both. I’ve looked it over a few times now, and while it’s possible it is a deepfake of a real person, the person in the video is almost certainly not AI-generated. I think it’s important to understand the difference between these two technologies.

We at REDACTED have trained a GAN to generate our own people who do not exist. That is something fundamentally different from a “deepfake”.

rekt:

Please can you explain the difference between an AI-generated person and a deepfake?

IE:

Well, to be clear, deepfake technologies do use AI. However, deepfakes as a technology do not involve training an AI to create a new persona. Instead, deepfakes take an existing set of data of one person's face and overlay it on top of another person's face in an existing video. If done right, what you get is a photorealistic and convincing "faceswap": a video of a person doing something they did not in fact do. In sum, deepfakes do not create a new person at all; they overlay an already existing face onto another video.

On the other hand, an "AI-generated person", whether represented as a video or a still image, involves an entirely separate kind of machine learning, in which a large number of photos of different people are given to an AI (usually a Generative Adversarial Network, or 'GAN') so that the AI begins to learn what a 'person' or a 'body' is. Once it has enough of this data, the AI can generate new iterations of people based on the data it has already been shown. So in terms of our current conversation, this kind of AI is the exact opposite of deepfakes: it cannot be used to insert a person into a video, but it can create new people out of thin air.

What I saw regarding DeTrade were accusations that the person in the video was AI generated, and possibly also deepfaked over an existing video of another person. I want to be clear that this is almost certainly not the case.

rekt:

What makes you so sure?

IE:

It's a matter of capabilities. There are maybe five or so companies in the world that can generate a new photorealistic person from nothing with an AI and then also insert that person into an existing video like the one we saw, with that degree of realism. I should know; we are one of those five companies. The others are all multi-billion-dollar tech companies like Nvidia, or highly reputable AI startups with tens of millions of dollars in funding. The likelihood that C-suite execs at Nvidia are taking time out of their schedules to scam the DeFi community for a few million bucks seems very slim to me.

To be clear on this point, there are tons of people who could have lifted the face you saw in that video from a video of a real person. Maybe a teacher giving a Zoom class or something.

But the idea that it's an AI-generated face is nearly impossible to believe.

rekt:

AI and deepfakes seem to be the perfect tools for increasing paranoia, and many will think they do more harm than good. How would you respond to that?

IE:

Frankly speaking, I think it's appropriate to be paranoid.

I think everyone needs to get used to the idea that just because you see a photo, or video, or audio of a person, it does not mean that person was really the one in the video or that the person even really exists.

Lots of people understand this intellectually, but then at the same time are looking for things like "non-anon teams" as if seeing a guy give a livestream or show a picture of himself with an ID provides them some extra layer of protection in their investment. This is not really the case.

Right now there are very few places where someone can spin a new person out of whole cloth and create a video of them. I'm proud to count our company as one of those places. But over time, these technologies always get easier and easier for amateur hobbyists or even organized scammers to replicate, and we are rapidly approaching that point.

It's as simple as accepting that, without meeting someone face to face, you are never going to be able to know whether they are real, or whether the video you saw of them doing something was actually them or just a fake. In my opinion we are already at that point, and the proliferation of this technology guarantees that holding to this kind of philosophy will become more and more important as time goes on.

If you didn't shake someone's hand, you can't be certain they exist. And if you didn't hold their ID card, you can't be sure their ID does either.

In the information age, when we rely so much on the accuracy of the media, we can no longer trust what we see or hear.

Innovation has always had its enemies; will our opinions of this technology change over time?

Perhaps in twenty years, we will realise our aversion to deepfakes was simply a case of technophobia, and that the societal good outweighs the bad. With the current press that the technology receives, this future is hard to imagine.

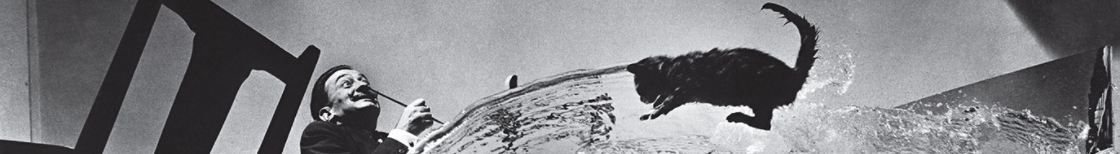

Salvador Dalí once said: “Si muero, no muero del todo,” or “If I die, I won’t completely die.”

Thirty years after his death, his words take on a new meaning at The Dalí Museum, where a deepfake of the artist now greets visitors.

REKT serves as a public platform for anonymous authors; we take no responsibility for the views or content hosted on REKT.

donate (ETH / ERC20): 0x3C5c2F4bCeC51a36494682f91Dbc6cA7c63B514C

disclaimer:

REKT is not responsible or liable in any manner for any Content posted on our Website or in connection with our Services, whether posted or caused by ANON Author of our Website, or by REKT. Although we provide rules for Anon Author conduct and postings, we do not control and are not responsible for what Anon Author post, transmit or share on our Website or Services, and are not responsible for any offensive, inappropriate, obscene, unlawful or otherwise objectionable content you may encounter on our Website or Services. REKT is not responsible for the conduct, whether online or offline, of any user of our Website or Services.
